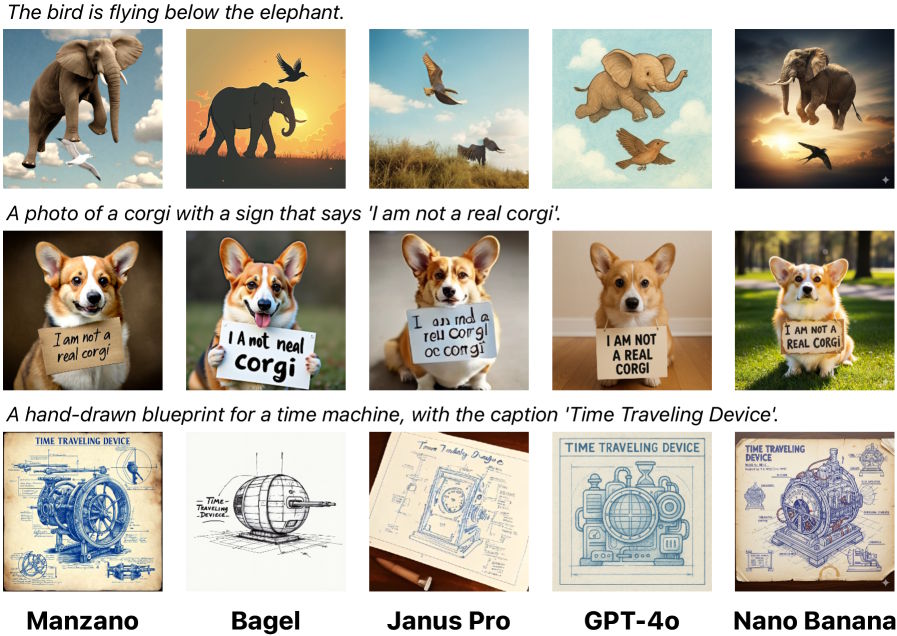

Apple has introduced a research paper on a new AI model for working with images called Manzano. It is capable of both recognizing and generating images, which is usually a challenging task for open models. The company published the results of Manzano’s tests on complex queries, comparing it with systems like Deepseek Janus Pro, GPT-4o, and Google’s Gemini 2.5 Flash Image Generation.

Manzano uses a hybrid image tokenizer that provides two types of tokens. Continuous tokens help the model better understand images, while discrete tokens help generate them. Both streams are formed by a shared encoder, reducing conflicts between image analysis and generation tasks.

The Manzano architecture includes a hybrid tokenizer, a unified language model, and a separate image decoder. Apple has created several versions of the decoder with varying numbers of parameters, allowing it to work with resolutions from 256 to 2048 pixels. For training, researchers used over two billion “image-text” pairs and one billion “text-image” pairs from public and internal sources.

In Apple’s tests, the Manzano model showed better results on tasks involving diagram analysis, documents, and other tasks requiring extensive text work. Versions with more parameters demonstrate higher quality performance compared to smaller ones. Manzano confidently handles image generation tasks, stylization, editing, adding new elements, and depth estimation.

Apple believes that the modular structure of Manzano will allow for independent updates of individual components and the application of different training approaches. The model is not yet available for public use, but the company plans to develop its own AI solutions and use GPT-5 from OpenAI in Apple Intelligence, starting with iOS 26.