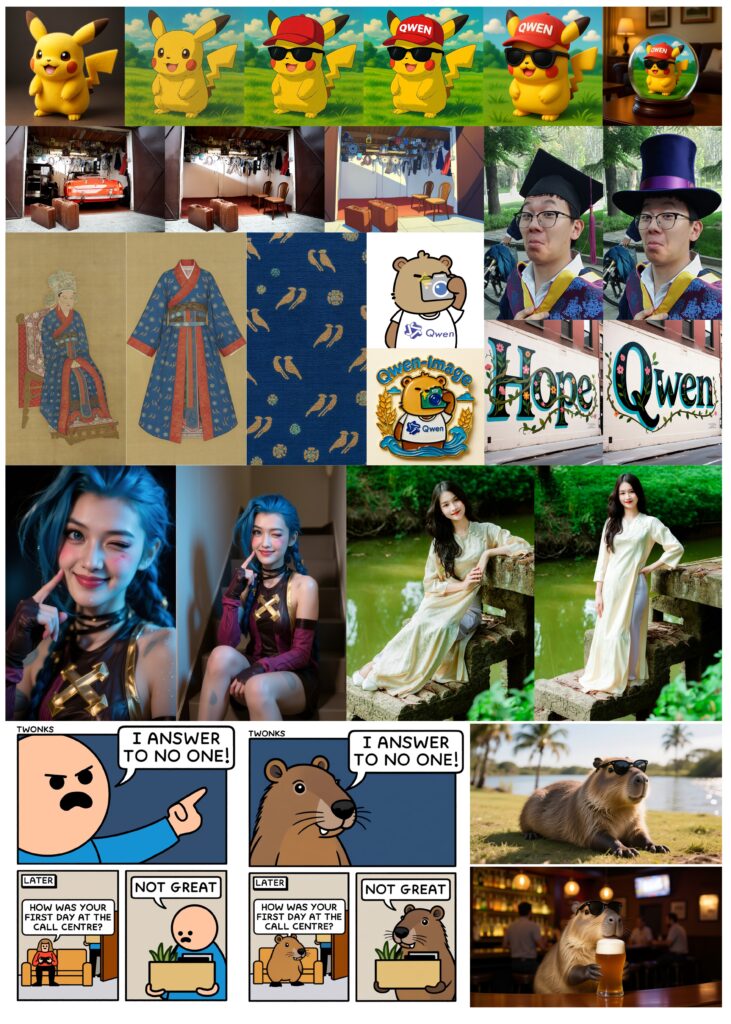

Alibaba introduced Qwen-Image — a new AI model with 20 billion parameters that creates images with high-quality text in various styles. Developers note that Qwen-Image supports bilingual text and easily switches between languages, as well as generates text in different visual contexts — from street scenes to presentation slides.

The model allows not only creating new images but also editing them — changing style, adding or removing objects, and adjusting the positions of people in photos. Qwen-Image performs tasks of classical computer vision, such as assessing image depth or creating new angles while preserving the original content.

The architecture of Qwen-Image includes three main components: Qwen2.5-VL for understanding text and images, Variational AutoEncoder for image compression, and Multimodal Diffusion Transformer for creating the final result. The new MSRoPE technology ensures precise text placement in images, enhancing the quality of text and image combination even at different resolutions.

The Alibaba team built a training dataset without using AI-generated content, focusing on nature photos, design, images of people, and synthetic examples. Additional filters weed out low-quality images, and various approaches to text rendering provide data diversity for training.

In tests, Qwen-Image outperformed several commercial models, such as GPT-Image-1 and Flux.1, especially in creating and editing images, as well as in the accuracy of rendering Chinese characters. The model is available for free on GitHub and Hugging Face, and users can test it in a live demonstration .