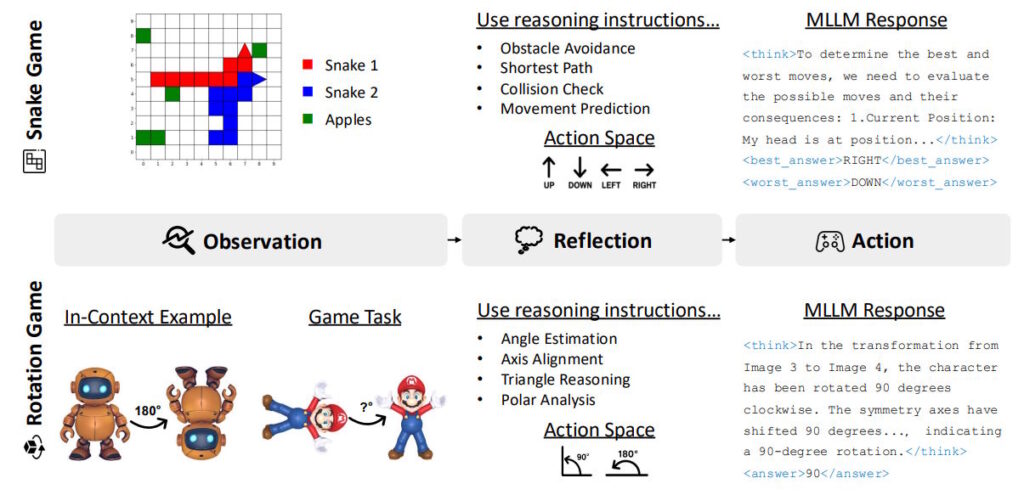

Researchers from Rice University, Johns Hopkins University, and Nvidia have introduced an approach to training multimodal AI on simple arcade games instead of specialized mathematical datasets. Their method, “Visual Game Learning” (ViGaL), builds on the Qwen2.5-VL-7B model, which is trained by playing variations of Snake and a Tetris-style rotation game.

For each game, the researchers created 36,000 training examples at varying levels of difficulty. Training on Snake improved the model’s ability to solve tasks involving coordinates and expressions, while training on the rotation game increased its accuracy in determining angles and lengths. Hunyuan3D served as the data source for the 3D objects. After this training, the model handles spatial tasks better and plans its moves more effectively.
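To make the idea of difficulty-graded game examples concrete, here is a minimal Python sketch of how Snake training states might be generated. It is an illustration only, not the researchers' pipeline: the three difficulty tiers, the board sizes, the `make_example` and `make_dataset` helpers, and the "safe moves" label are all assumptions introduced for this example.

```python
import random

# Hypothetical difficulty tiers: (board size, snake length). Not from the paper.
DIFFICULTY = {"easy": (6, 3), "medium": (10, 5), "hard": (14, 8)}
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


def make_example(level, rng):
    """Build one Snake state and label the moves that do not crash immediately."""
    size, length = DIFFICULTY[level]
    # Lay the snake out horizontally, head first (leftmost), at a random spot.
    head_x = rng.randrange(0, size - length + 1)
    y = rng.randrange(size)
    snake = [(head_x + i, y) for i in range(length)]
    # Place the apple on any cell the snake does not occupy.
    free = [(x, r) for x in range(size) for r in range(size) if (x, r) not in snake]
    apple = rng.choice(free)
    hx, hy = snake[0]
    # Simplification: every body cell counts as blocked, including the tail.
    safe = sorted(
        name
        for name, (dx, dy) in MOVES.items()
        if 0 <= hx + dx < size
        and 0 <= hy + dy < size
        and (hx + dx, hy + dy) not in snake
    )
    return {"level": level, "size": size, "snake": snake,
            "apple": apple, "safe_moves": safe}


def make_dataset(n_per_level, seed=0):
    """Generate n_per_level examples for each difficulty tier, reproducibly."""
    rng = random.Random(seed)
    return [make_example(level, rng)
            for level in DIFFICULTY
            for _ in range(n_per_level)]
```

With three tiers, `make_dataset(12000)` would yield 36,000 examples, matching the per-game total mentioned above; the even split across tiers is an assumption of this sketch, since the article does not say how difficulty was distributed.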

In tests, the game-trained model, ViGaL, achieved an accuracy of 50.6 percent on mathematical benchmarks, surpassing the specialized model MM-Eureka-Qwen-7B, which reached 50.1 percent. Progress was most notable on geometry tasks, where scores nearly doubled. On general tests, ViGaL scored 53.9 percent, higher than GPT-4o but slightly behind Gemini 2.0 Flash.

After training on games, the model was tested on classic Atari games such as Breakout and Ms. Pac-Man, as well as on various tasks in mathematics, geometry, and 3D scene analysis. Here, ViGaL significantly outperformed the base version of the model. The researchers noted that step-by-step hints and carefully chosen rewards during training raised accuracy by a few more percent.

Reinforcement learning produced a 12.3 percent performance increase, while standard fine-tuning reduced accuracy. Increasing the volume of training data also improved the results. The researchers believe that such game environments could become an effective way to teach AI general reasoning skills.